Result:

cuda
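The cell that printed this value is not shown. A minimal sketch of the usual device check, assuming the variable is called `device` (a hypothetical name), might look like this:

```python
import torch

# Use the GPU when CUDA is available, otherwise fall back to the CPU.
# The name `device` is an assumption; the original cell is not shown.
device = 'cuda' if torch.cuda.is_available() else 'cpu'
print(device)
```

On a machine without a CUDA-capable GPU this prints `cpu` instead.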

------------------------------------------------

Result:

(1797, 64)

(1797,)
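These shapes match scikit-learn's bundled handwritten digits dataset (1,797 flattened 8x8 images). Assuming that is the data used here, a sketch of the loading step could be the following; the names `x_data` and `y_data` are assumptions:

```python
from sklearn.datasets import load_digits

# Assumption: the data is scikit-learn's digits dataset.
digits = load_digits()
x_data = digits['data']    # each row is a flattened 8x8 image (64 features)
y_data = digits['target']  # integer labels 0 through 9

print(x_data.shape)
print(y_data.shape)
```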

------------------------------------------------

------------------------------------------------

torch.Size([1797, 64])

torch.Size([1797])
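The two sizes above are what the arrays look like after conversion to tensors. A sketch of that conversion, using zero-filled placeholder arrays of the same shape in place of the real data (an assumption made to keep the example self-contained):

```python
import numpy as np
import torch

# Placeholder arrays with the shapes printed earlier; real data would go here.
x_np = np.zeros((1797, 64), dtype=np.float64)
y_np = np.zeros((1797,), dtype=np.int64)

# Features become float32, labels become int64 class indices.
x_data = torch.tensor(x_np, dtype=torch.float32)
y_data = torch.tensor(y_np, dtype=torch.long)

print(x_data.shape)
print(y_data.shape)
```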

------------------------------------------------

Result:

tensor([[1., 0., 0., 0., 0., 0., 0., 0., 0., 0.],
        [0., 1., 0., 0., 0., 0., 0., 0., 0., 0.],
        [0., 0., 1., 0., 0., 0., 0., 0., 0., 0.],
        [0., 0., 0., 1., 0., 0., 0., 0., 0., 0.],
        [0., 0., 0., 0., 1., 0., 0., 0., 0., 0.],
        [0., 0., 0., 0., 0., 1., 0., 0., 0., 0.],
        [0., 0., 0., 0., 0., 0., 1., 0., 0., 0.],
        [0., 0., 0., 0., 0., 0., 0., 1., 0., 0.],
        [0., 0., 0., 0., 0., 0., 0., 0., 1., 0.],
        [0., 0., 0., 0., 0., 0., 0., 0., 0., 1.]])
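The matrix above is what one-hot encoding produces for the labels 0 through 9 in order. A sketch using `torch.nn.functional.one_hot`, with `labels` as a stand-in for the first ten targets (an assumption, since the original cell is not shown):

```python
import torch
import torch.nn.functional as F

# Stand-in labels 0..9; in the post these would come from the dataset targets.
labels = torch.arange(10)

# one_hot returns int64, so cast to float for use with a float-valued model output.
y_one_hot = F.one_hot(labels, num_classes=10).float()
print(y_one_hot)
```

Each row has a single 1 in the column that matches its class index, which is why ordered labels give an identity matrix.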

------------------------------------------------

Result:

torch.Size([1437, 64]) torch.Size([1437, 10])

torch.Size([360, 64]) torch.Size([360, 10])
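The sizes correspond to an 80/20 split of 1,797 samples, with the test share rounded up to 360. The original split call is not shown; the sketch below reproduces the same sizes with a random permutation on placeholder tensors, which is an assumption and not necessarily the method used in the post:

```python
import math
import torch

torch.manual_seed(0)

# Placeholder tensors with the shapes of the features and one-hot labels.
x = torch.zeros(1797, 64)
y = torch.zeros(1797, 10)

# Hold out 20% of the samples, rounding the test size up.
n_test = math.ceil(len(x) * 0.2)
perm = torch.randperm(len(x))
test_idx, train_idx = perm[:n_test], perm[n_test:]

x_train, y_train = x[train_idx], y[train_idx]
x_test, y_test = x[test_idx], y[test_idx]

print(x_train.shape, y_train.shape)
print(x_test.shape, y_test.shape)
```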

------------------------------------------------

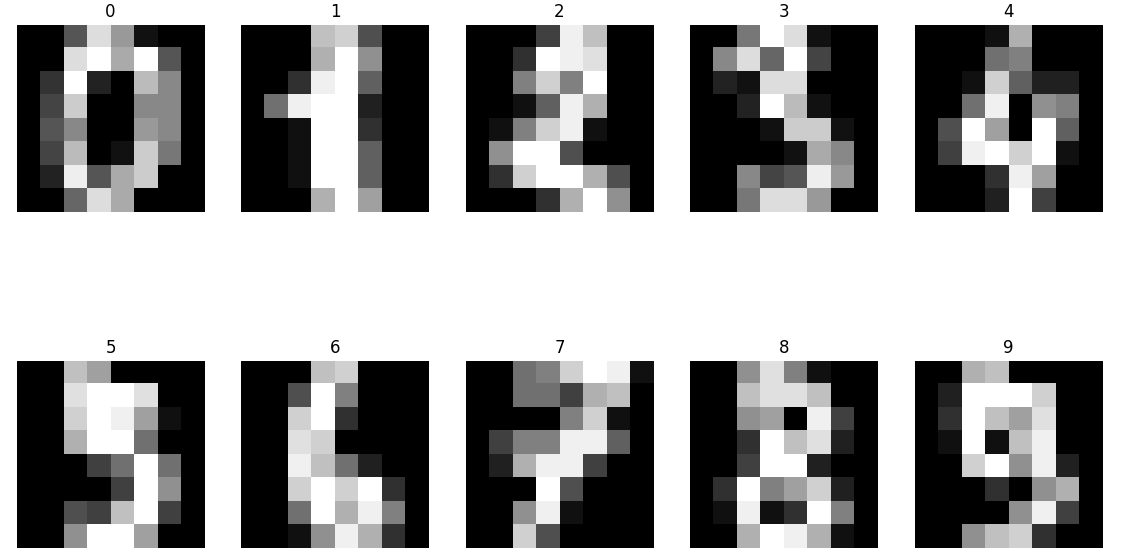

Result:

------------------------------------------------

Result:

Epoch 0/50 Loss: 1.973190 Accuracy: 54.77%

Epoch 1/50 Loss: 0.283309 Accuracy: 90.74%

Epoch 2/50 Loss: 0.173487 Accuracy: 94.17%

Epoch 3/50 Loss: 0.136195 Accuracy: 95.20%

Epoch 4/50 Loss: 0.109064 Accuracy: 96.98%

Epoch 5/50 Loss: 0.096432 Accuracy: 97.08%

Epoch 6/50 Loss: 0.080393 Accuracy: 97.96%

Epoch 7/50 Loss: 0.067421 Accuracy: 98.51%

Epoch 8/50 Loss: 0.071652 Accuracy: 98.15%

Epoch 9/50 Loss: 0.060040 Accuracy: 98.55%

Epoch 10/50 Loss: 0.061195 Accuracy: 98.56%

Epoch 11/50 Loss: 0.053115 Accuracy: 98.70%

Epoch 12/50 Loss: 0.055170 Accuracy: 98.76%

Epoch 13/50 Loss: 0.063474 Accuracy: 98.34%

Epoch 14/50 Loss: 0.078649 Accuracy: 97.81%

Epoch 15/50 Loss: 0.049750 Accuracy: 98.42%

Epoch 16/50 Loss: 0.035162 Accuracy: 99.17%

Epoch 17/50 Loss: 0.034921 Accuracy: 99.12%

Epoch 18/50 Loss: 0.037563 Accuracy: 99.25%

Epoch 19/50 Loss: 0.026590 Accuracy: 99.73%

Epoch 20/50 Loss: 0.025800 Accuracy: 99.59%

Epoch 21/50 Loss: 0.031414 Accuracy: 99.18%

Epoch 22/50 Loss: 0.026794 Accuracy: 99.58%

Epoch 23/50 Loss: 0.021841 Accuracy: 99.80%

Epoch 24/50 Loss: 0.020110 Accuracy: 99.80%

Epoch 25/50 Loss: 0.019018 Accuracy: 99.80%

Epoch 26/50 Loss: 0.020113 Accuracy: 99.73%

Epoch 27/50 Loss: 0.016201 Accuracy: 99.93%

Epoch 28/50 Loss: 0.018653 Accuracy: 99.73%

Epoch 29/50 Loss: 0.018847 Accuracy: 99.80%

Epoch 30/50 Loss: 0.019495 Accuracy: 99.73%

Epoch 31/50 Loss: 0.016786 Accuracy: 99.80%

Epoch 32/50 Loss: 0.015192 Accuracy: 99.71%

Epoch 33/50 Loss: 0.014195 Accuracy: 99.86%

Epoch 34/50 Loss: 0.011766 Accuracy: 99.93%

Epoch 35/50 Loss: 0.014165 Accuracy: 99.80%

Epoch 36/50 Loss: 0.011472 Accuracy: 99.86%

Epoch 37/50 Loss: 0.011846 Accuracy: 99.93%

Epoch 38/50 Loss: 0.012318 Accuracy: 100.00%

Epoch 39/50 Loss: 0.011651 Accuracy: 99.93%

Epoch 40/50 Loss: 0.013640 Accuracy: 99.66%

Epoch 41/50 Loss: 0.014027 Accuracy: 99.80%

Epoch 42/50 Loss: 0.009366 Accuracy: 100.00%

Epoch 43/50 Loss: 0.009039 Accuracy: 100.00%

Epoch 44/50 Loss: 0.009845 Accuracy: 99.93%

Epoch 45/50 Loss: 0.010043 Accuracy: 99.93%

Epoch 46/50 Loss: 0.010249 Accuracy: 99.93%

Epoch 47/50 Loss: 0.009615 Accuracy: 100.00%

Epoch 48/50 Loss: 0.008398 Accuracy: 100.00%

Epoch 49/50 Loss: 0.009546 Accuracy: 99.93%

Epoch 50/50 Loss: 0.011114 Accuracy: 99.93%
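A log in this format typically comes from a mini-batch training loop that averages loss and accuracy per epoch. The sketch below is an illustration on synthetic data, not the post's actual code: the single-layer model, the Adam optimizer, the learning rate, the batch size, and the shortened epoch count are all assumptions.

```python
import torch
from torch import nn, optim
from torch.utils.data import DataLoader, TensorDataset

torch.manual_seed(0)

# Synthetic stand-in data: 64 features, 10 one-hot encoded classes.
x_train = torch.randn(256, 64)
y_train = nn.functional.one_hot(torch.randint(0, 10, (256,)), num_classes=10).float()
loader = DataLoader(TensorDataset(x_train, y_train), batch_size=32, shuffle=True)

# Hypothetical architecture and optimizer; the original model is not shown.
model = nn.Sequential(nn.Linear(64, 10))
optimizer = optim.Adam(model.parameters(), lr=0.01)
loss_fn = nn.CrossEntropyLoss()

epochs = 5  # shortened for the sketch
history = []
for epoch in range(epochs + 1):
    sum_loss, sum_acc = 0.0, 0.0
    for x_batch, y_batch in loader:
        target = y_batch.argmax(dim=1)   # one-hot rows back to class indices
        logits = model(x_batch)
        loss = loss_fn(logits, target)

        optimizer.zero_grad()
        loss.backward()
        optimizer.step()

        sum_loss += loss.item()
        sum_acc += (logits.argmax(dim=1) == target).float().mean().item() * 100

    avg_loss = sum_loss / len(loader)
    avg_acc = sum_acc / len(loader)
    history.append((avg_loss, avg_acc))
    print(f'Epoch {epoch}/{epochs} Loss: {avg_loss:.6f} Accuracy: {avg_acc:.2f}%')
```

Because the loop runs over `range(epochs + 1)`, it prints one more line than the epoch count, matching the way the log above runs from 0/50 through 50/50.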

------------------------------------------------

1.7313, -0.0853], grad_fn=<SelectBackward0>)

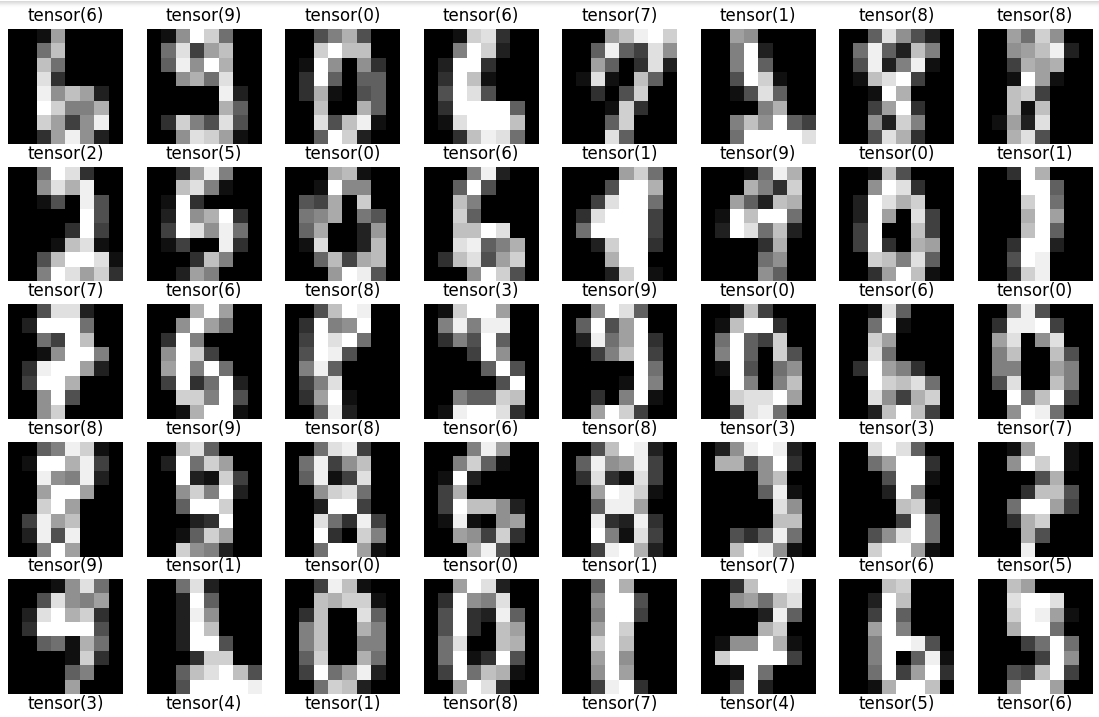

Result:

tensor([5.7936e-06, 6.3101e-09, 7.6254e-10, 5.4668e-08, 2.9941e-08, 9.9999e-01,
        7.5594e-08, 5.9151e-07, 6.6452e-07, 1.0804e-07],
       grad_fn=<SelectBackward0>)
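Values like these, non-negative and summing to 1, are what softmax produces from a model's raw scores. A sketch with made-up logits (the numbers are hypothetical, chosen so that class 5 dominates):

```python
import torch

# Hypothetical raw scores for one sample over 10 classes.
logits = torch.tensor([[2.0, -1.0, 0.5, 0.0, -0.5, 9.0, 0.1, 0.3, 0.2, -0.2]])

# Softmax along the class dimension turns scores into probabilities.
y_prob = torch.softmax(logits, dim=1)
pred = int(y_prob[0].argmax())

print(y_prob[0])
print(pred)
```

The predicted class is the index of the largest probability, which is also the index of the largest logit.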

------------------------------------------------

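Probability of digit 1: 0.00

Probability of digit 2: 0.00

Probability of digit 3: 0.00

Probability of digit 4: 0.00

Probability of digit 5: 1.00

Probability of digit 6: 0.00

Probability of digit 7: 0.00

Probability of digit 8: 0.00

Probability of digit 9: 0.00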
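The listing above starts at digit 1, although the probability tensor has ten entries, one for each digit from 0 through 9. A sketch that prints all ten, using the probability values shown earlier copied into a plain list (the list name `probs` is an assumption):

```python
# Probabilities copied from the tensor output shown earlier.
probs = [5.7936e-06, 6.3101e-09, 7.6254e-10, 5.4668e-08, 2.9941e-08,
         9.9999e-01, 7.5594e-08, 5.9151e-07, 6.6452e-07, 1.0804e-07]

# enumerate starts at 0, so digit 0 is included.
lines = [f'Probability of digit {i}: {p:.2f}' for i, p in enumerate(probs)]
print('\n'.join(lines))
```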

------------------------------------------------
